Plover is the world’s first free and open-source stenography software. Unlike traditional computer-aided (machine-shorthand) translation systems, which typically resemble sandboxed word processors, Plover lets you use your steno machine as an OS controller and input device. Each has its own user base and use cases, and I’m not saying one is inherently better or worse than the other; they fundamentally serve different audiences. One of the benefits of Plover, though, is that it unlocks a whole new world of single-stroke commands you can use to control your computer. Here’s my list of the top commands that I use nearly every day. Keep in mind that I use macOS. If you use Windows, for most commands you can just substitute “ctrl” wherever I use “command” and you’ll be good to go. An exhaustive list of all the commands Plover has to offer is available here.

1. Switching workspaces

When you have several workspaces on macOS and you want to switch between them quickly.

SKR-R = {#control(left)}

SKR-G = {#control(right)}

“switch to the workspace to the left” (mnemonic hook: “screen-⬅”)

“switch to the workspace to the right” (mnemonic hook: “screen-➡”)

*Think of the RPBG keys as standard keyboard arrow keys.

2. Go to the end/beginning of the document

Useful for when you’re making edits while captioning and the speaker starts talking again, or you just want to snap to the bottom of the document you’re working on.

SR-RZ = {#command(down)}

SR-FD = {#command(up)}

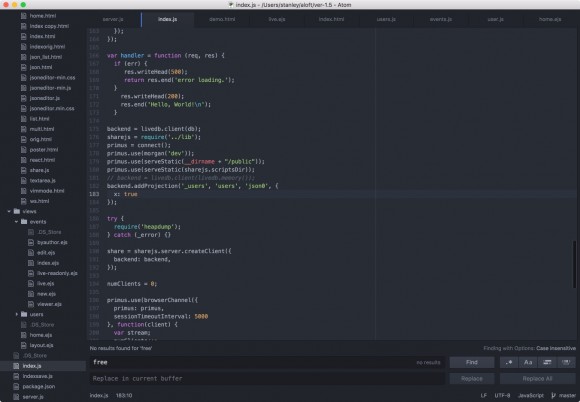

3. Git commands

TKPWHRA*UL (git add [all]) = {>}git add .

TKPWEUPLT = {>}git commit

TKPW*EUPLT = {>}git commit -m "{^}{-|}

TKPWHRURP = {>}git pull origin master

TKPWHRURB = {>}git push origin master

TKPWAUTS = {>}git status
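If you'd rather generate these entries than type them into the dictionary editor by hand, here's a minimal Python sketch. It assumes your dictionary is a JSON file (Plover's default format) and that the commit entry opens with a plain ASCII double quote. The names `GIT_ENTRIES` and `to_dictionary_json` are mine, purely for illustration.

```python
import json

# Stroke -> translation pairs copied from the list above.
# Assumption: the commit -m entry uses a plain ASCII double quote.
GIT_ENTRIES = {
    "TKPWHRA*UL": "{>}git add .",
    "TKPWEUPLT": "{>}git commit",
    "TKPW*EUPLT": '{>}git commit -m "{^}{-|}',
    "TKPWHRURP": "{>}git pull origin master",
    "TKPWHRURB": "{>}git push origin master",
    "TKPWAUTS": "{>}git status",
}


def to_dictionary_json(entries):
    """Serialize the entries as JSON text; json.dumps escapes the quote for us."""
    return json.dumps(entries, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    print(to_dictionary_json(GIT_ENTRIES))
```

The one thing to watch is the double quote in the commit entry: inside a JSON string it has to be written as `\"`, which `json.dumps` handles automatically.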

4. One-stroke money formatters

When people say sums of money in ways that make you stop and think where to put the commas and periods, do these instead.

TKHR-RS = {*($c)}

“Turn the previous number into a correctly-formatted sum of money.”

3 + TKHR-RS becomes $3

4.5 + TKHR-RS becomes $4.50

.33 + TKHR-RS becomes $0.33

THO*UDZ = {^}000{*($c)}

“Turn the previous number into thousands of dollars.”

4 + THO*UDZ becomes $4,000

200 + THO*UDZ becomes $200,000
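To make the formatting rule concrete, here's a small Python sketch that reproduces the outputs listed above. It's only an illustration of the behavior I see, not Plover's internals: whole numbers get thousands separators, and anything with a decimal point is padded to two places. The function names are hypothetical.

```python
def format_currency(number_text, symbol="$"):
    """Mimic the effect of {*($c)} on the previously written number."""
    try:
        # Whole numbers: thousands separators, no decimals.
        return f"{symbol}{int(number_text):,}"
    except ValueError:
        # Anything with a decimal point: always two decimal places.
        return f"{symbol}{float(number_text):,.2f}"


def thousands(number_text):
    """Mimic THO*UDZ: attach 000 to the number, then format it as currency."""
    return format_currency(number_text + "000")


if __name__ == "__main__":
    for text in ("3", "4.5", ".33"):
        print(text, "->", format_currency(text))
    for text in ("4", "200"):
        print(text, "->", thousands(text))
```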

PH*LDZ = {^}{#option(left)}${#option(right)}million

“Turn the previous number into millions of dollars.”

7 + PH*LDZ = $7 million

*Use the same paradigm to do trillions of dollars (TR*LDZ) and billions of dollars (PW*LDZ).
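Spelled out, "the same paradigm" just means swapping the final word. A quick Python sketch of that pattern follows. The billion and trillion translations are my extrapolation from the million entry, so treat them as assumptions and test them before relying on them; `money_word_entry` is a made-up helper name.

```python
def money_word_entry(word):
    """Build a translation on the PH*LDZ pattern: hop back one word,
    insert a dollar sign, hop forward, then write the magnitude word."""
    return "{^}{#option(left)}${#option(right)}" + word


# Strokes are from the post; the last two translations are extrapolated.
MONEY_WORD_ENTRIES = {
    "PH*LDZ": money_word_entry("million"),
    "PW*LDZ": money_word_entry("billion"),
    "TR*LDZ": money_word_entry("trillion"),
}

if __name__ == "__main__":
    for stroke, translation in MONEY_WORD_ENTRIES.items():
        print(stroke, "=", translation)
```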

5. Start lower with no space, start upper with no space.

When you want to suppress automatic spaces and want to control the case of the first letter of the output.

KPA* = {^}{-|}

“Start text where my cursor is currently located and capitalize the first letter.”

STHROER = {^}{>}

“Start text where my cursor is currently located and make sure the first letter is lowercased.”

6. Special characters that need to be escaped.

Certain characters that are part of Plover’s dictionary syntax need to be escaped correctly to define them so that Plover doesn’t get confused and misinterpret the entry.
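One more layer of escaping shows up on disk. Assuming your dictionary is a JSON file (Plover's default format), every backslash in this section's entries has to be doubled when it's stored. Here's a minimal Python sketch that lets `json.dumps` do the doubling; `ESCAPED_ENTRIES` is just an illustrative name.

```python
import json

# Translations exactly as they appear in this section.
# Raw strings keep each backslash literal on the Python side.
ESCAPED_ENTRIES = {
    "KHRURB": r"\{{^}",
    "KHRURBS": r"{^}\}",
    "PWHR*RB": r"{^\^}",
}

# On disk, JSON represents each single backslash as two.
on_disk = json.dumps(ESCAPED_ENTRIES, indent=2)

if __name__ == "__main__":
    print(on_disk)
```

So an entry that reads `\{{^}` in the dictionary editor looks like `"\\{{^}"` if you open the JSON file in a text editor.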

KHRURB (curly brace open) = \{{^}

{

KHRURBS (curly brace close) = {^}\}

}

PWHR*RB (backslash) = {^\^}

\

7. Comment slashes

KPHERBS (comment slashes) = {^}//

// (hi)

KPH*ERBS (comment slashes + cap next) = {^}//{-|}

// (Hi)

TAEUBL/TPHR-P = (╯°□{#Shift_L}°)╯︵ ┻━┻

(╯°□ °)╯︵ ┻━┻

*Note the required {#Shift_L} to prevent special-character encoding weirdness.

SKWHR*UG = ¯\_(ツ{#Shift_L}{^})_/¯

¯\_(ツ)_/¯

*Note the required {#Shift_L} to prevent special-character encoding weirdness.

8. Brightness up/down

PWR*P = {#MonBrightnessUp}

PWR*B = {#MonBrightnessDown}

*Again, think of the P and B as up and down on a standard keyboard arrow set.

9. Sound controls

SRAO*UP (volume up) = {#AudioRaiseVolume}

SRAO*UB (volume down) = {#AudioLowerVolume}

SRAO*UPLT (volume mute) = {#AudioMute}

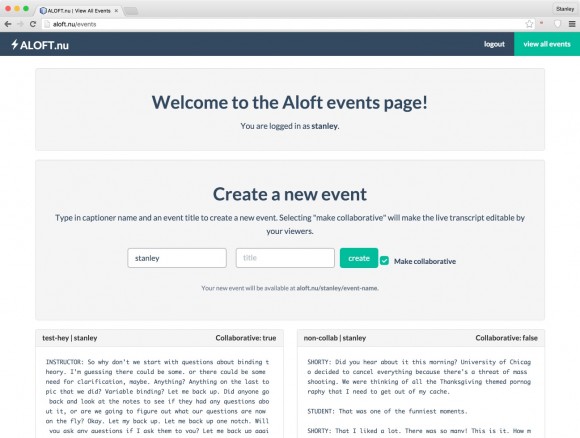

10. On-the-fly speaker definition

For when you didn’t create a speaker definition for someone beforehand but you still want to mark them by name in the transcript rather than using chevrons (>>).

SPOEU = {*<}{^:}{#Alt_L(Left)}{^\n\n^}{#command(right)}{^ ^}{-|}

Inserts a new line, puts the last name (or any word that comes prior to the stroke) in all caps, adds a colon, and capitalizes the next character.

“Josh” becomes \nJOSH:(capitalize next)

11. Go to end of line & add a semicolon

TKHRAO*EUPB (go to end of line) = {#command(right)}

SKHROEUPB (add semicolon to end of line — used frequently in writing JavaScript) = {#command(right)}{;}

12. CamelCase

KPW-PBG (cap next word and attach it) = {^}{-|}

set KPW-PBG attribute becomes setAttribute

KPHAO*EL (cap the first word and the next word, attach them) = {*-|}{^}{-|}

word KPHAO*EL press becomes WordPress
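If it helps to see the effect of the two strokes as plain string operations, here's a tiny Python sketch. It only models the resulting text, not how Plover gets there, and the function names are hypothetical.

```python
def attach_capitalized(first, second):
    """Effect of KPW-PBG: attach the next word and capitalize its first letter."""
    return first + second[:1].upper() + second[1:]


def attach_both_capitalized(first, second):
    """Effect of KPHAO*EL: capitalize both words and attach them."""
    return first[:1].upper() + first[1:] + second[:1].upper() + second[1:]


if __name__ == "__main__":
    print(attach_capitalized("set", "attribute"))
    print(attach_both_capitalized("word", "press"))
```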

13. Add comma at end of line, make new line

Handy for writing JavaScript objects or working with JSON.

KPHAPBL (comma + new line) = {#right}{,}{^\n^}{^}

14. Indented parentheticals

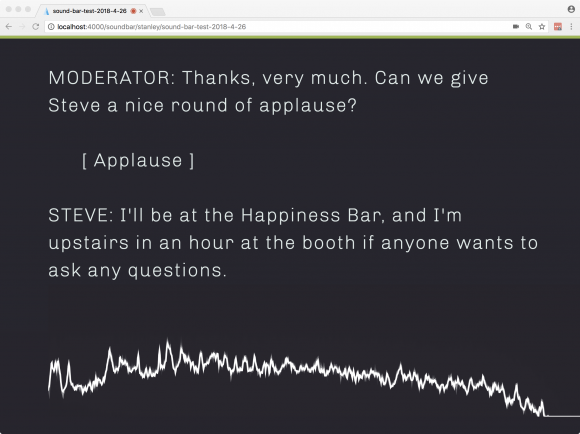

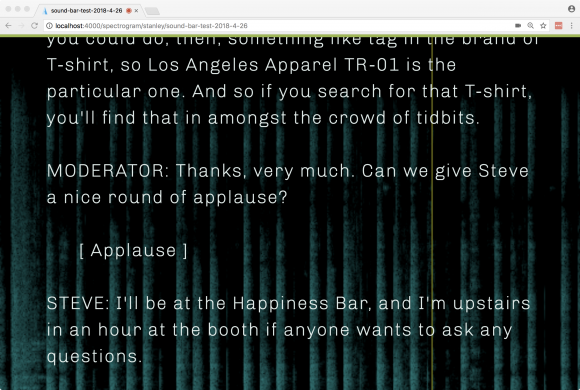

Used for captioning to mark things like laughter or applause.

KHRAFT (laughter) = {^\n^}{^\n^}{^\t^}{^}[ Laughter ]{-|}

Adds two line breaks, indents one tab, adds [ Laughter ], and capitalizes the next word.
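The same translation works for any other parenthetical, such as applause. Here's a short Python sketch that builds the translation for a given label, assuming a JSON dictionary. I haven't listed a stroke for applause, so pick your own; `parenthetical` is a hypothetical helper name.

```python
import json


def parenthetical(label):
    """Build a translation on the KHRAFT pattern: two line breaks,
    one tab, the bracketed label, then capitalize the next word."""
    return "{^\n^}{^\n^}{^\t^}{^}[ " + label + " ]{-|}"


if __name__ == "__main__":
    # json.dumps shows how each value is written inside the JSON file.
    for label in ("Laughter", "Applause"):
        print(json.dumps(parenthetical(label)))
```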

15. Dot-slash

Used for pointing to the current working directory in bash.

TKHR*RB (dot slash) = ./{^}

16. “S” is for “sticky” (parentheses & brackets)

Useful for writing function declarations and array pointers.

SPR-PB = {^}({^}

SPR-PBD (paren, double) = {^}()

SPWR*BG = {^}[{^}

So these are a few of the most important ones that I use for now. I’ll keep adding to this list as I think of more!

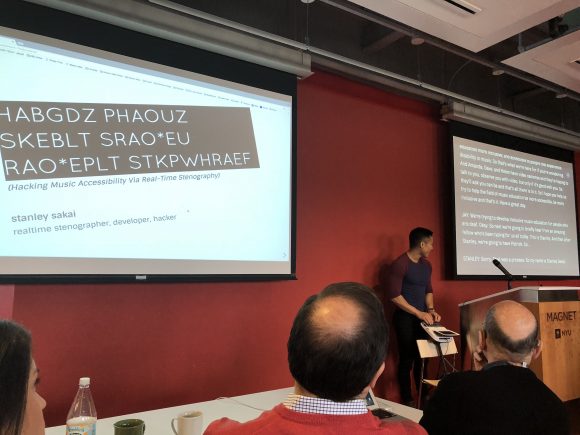

![On screen, it shows a bunch of dialogue and then parentheticals for [ Big Room House/EDM ] and [ Tiësto, Martin Garrix - "The Only Way Is Up" ].](http://blog.stanographer.com/wp-content/uploads/2018/04/C4_7WA5XAAEf-A8-580x363.jpg)